Last week, I attended ROS World 2021, a virtual ROSCon held after this year’s event in the USA was cancelled. Like the actual ROSCon, ROS World is a three-day event (including workshops) with multiple keynotes, panel discussions and talks about ROS/ROS2, a crazy number of ROS-based companies advertising their newest products, and finally - really cool, like-minded people. While a lot of people complained on social media about ROS World being virtual, it was definitely a plus for me because I couldn’t have attended otherwise. This was kind of my first ROS World: I did attend in 2020, but only caught a couple of talks. Here are some of my experiences from this year’s event:

The day before ROS World actually started, I attended a pre-conference workshop organized by Unity. The instructors taught us how to load a simple navigation stack example using ROS2 and Unity - including setting up the environment, setting up the robot and finally simulating a multi-robot navigation demo with multiple Turtlebot3 robots and one Husky platform with a UR5 arm. They also talked about their robotics visualization package, which is being used not only with robots but also in video games with simulated robots, such as Mars First Logistics. I cannot wait for this game to come out, as I’m already quite addicted to Surviving Mars. Apart from the incredible ways it’s being used, this complete application built from scratch gives me a great starting point for what I originally intended to do - drive a simulated AKROS robot in a Unity 3D model of Stratumseind in Eindhoven. I found this 3D model on Eindhoven city’s open data portal, which was definitely my best takeaway from this year’s Maker Faire Eindhoven. It’s an incredible portal with all kinds of datasets, and I hope other cities also open up their datasets like this. Coming back to the workshop, here are a few short videos:

Things don’t always go right. The demo was definitely not the most robust application (since it was just a demo of Unity’s features), plus I also messed up some steps, leading to the robots having some ‘accidents’… Glad this was only in simulation, and not implemented on actual robots out in the field.

Finally, after catching up with the instructors, I managed to get the robots to do their jobs, barely.

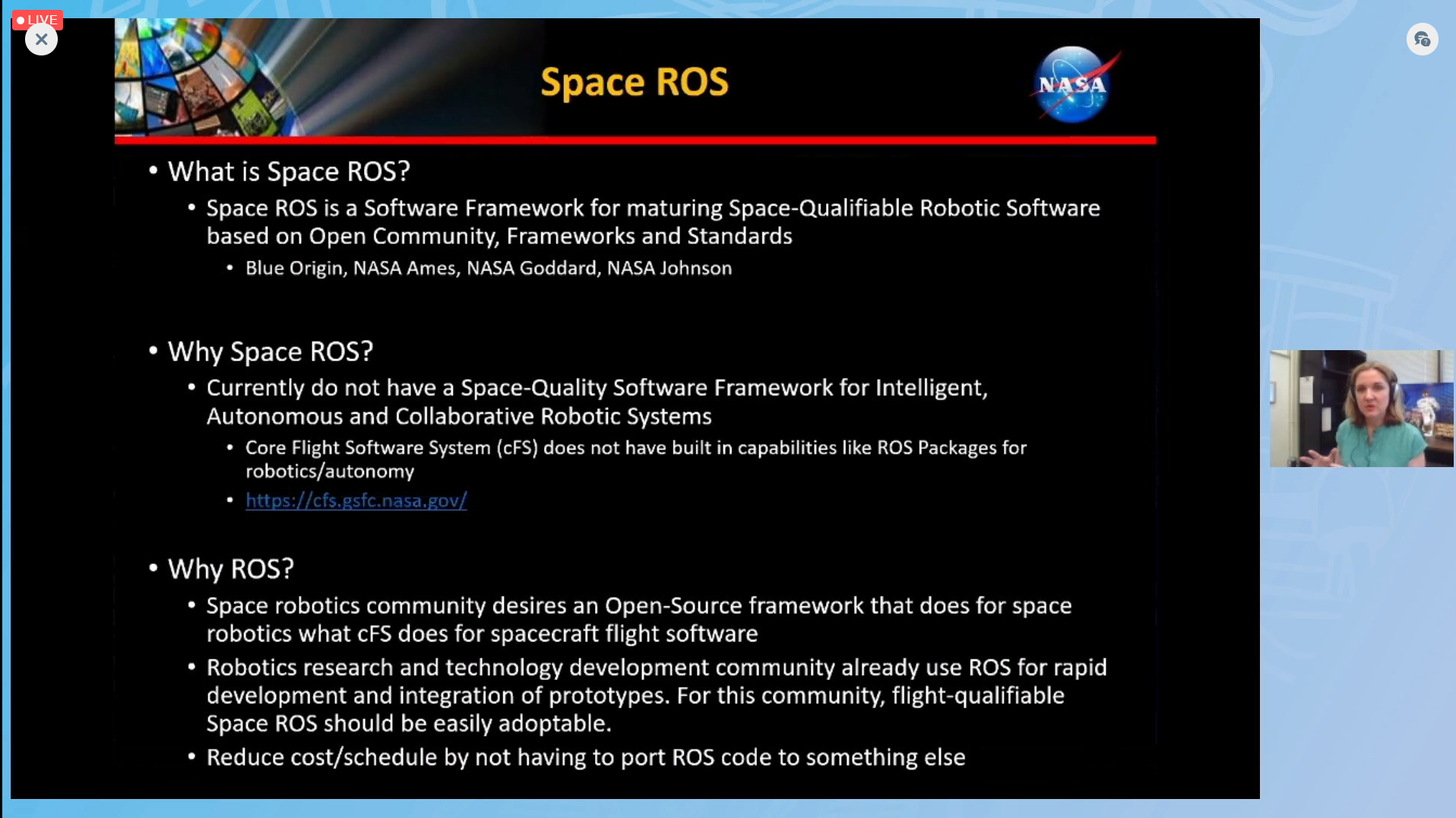

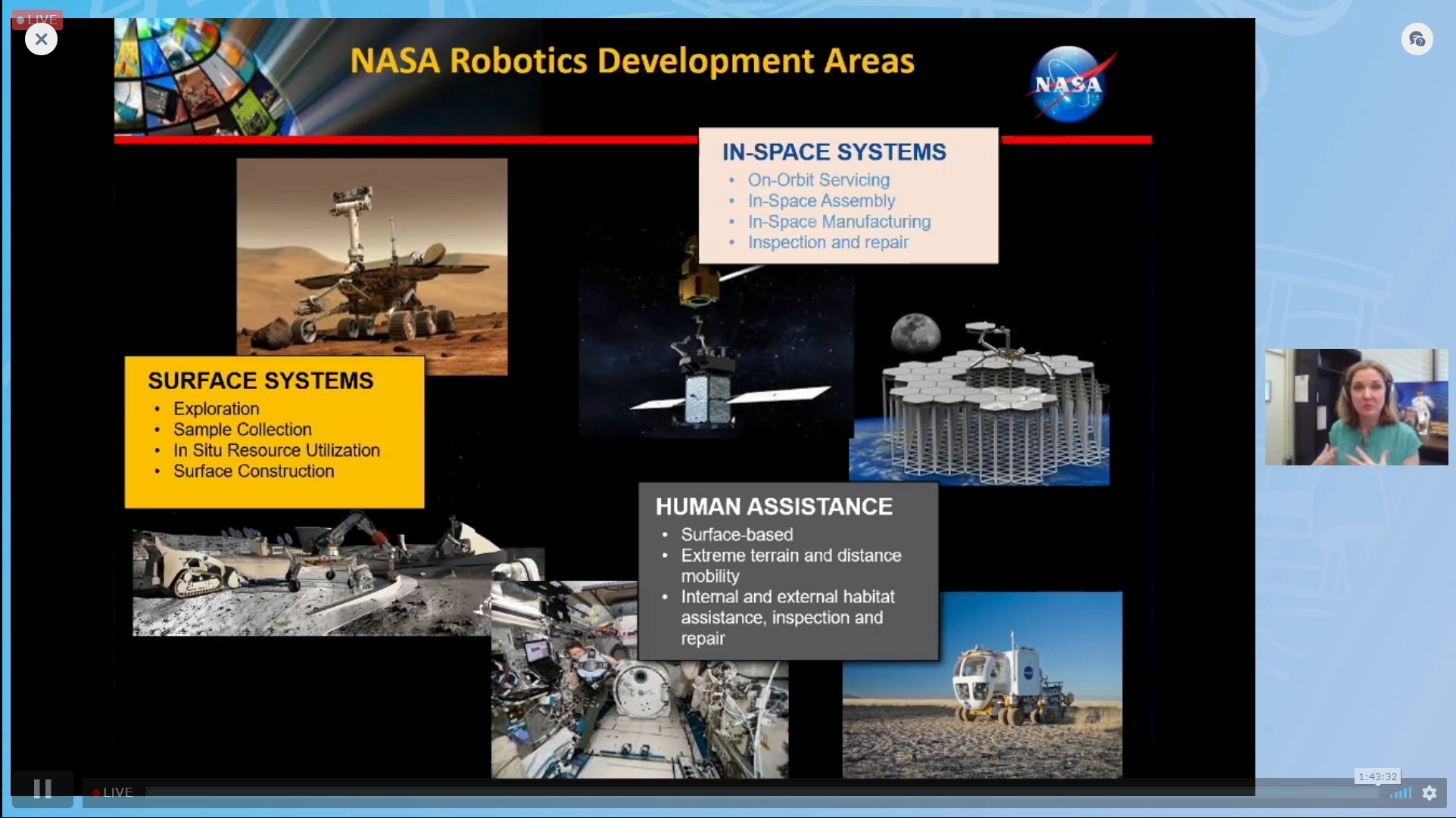

The next day (technically, the next evening given my time zone), ROS World kicked off. The keynote was given by Kimberly Hambuchen, chief of robotics at NASA Johnson Space Center, who talked about how NASA has been using ROS in space applications and how they are working towards standardizing ROS-based software for space. One of the applications Kimberly talked about that caught my eye was Affordance Templates, a general-purpose representation of tasks for robots that was built by NASA and TRACLabs. I also found out that one of my favourite robots, Astrobee (a free-flying robot on the International Space Station), runs ROS… how cool! She also talked about the importance of ‘space qualified’ systems, and the necessity of a framework to qualify ROS-based systems (hardware/software) for space. The biggest announcement was a project called Space ROS, initiated by NASA and Blue Origin. As someone mentioned in the chat, we’ve already got ROS Galactic, which would be a much cooler name for Space ROS. I am really curious about how this project shapes up and keen to try out the new developments in this space. I believe this might open up the space robotics industry to a much bigger audience, and I wonder what Elon Musk comes up with.

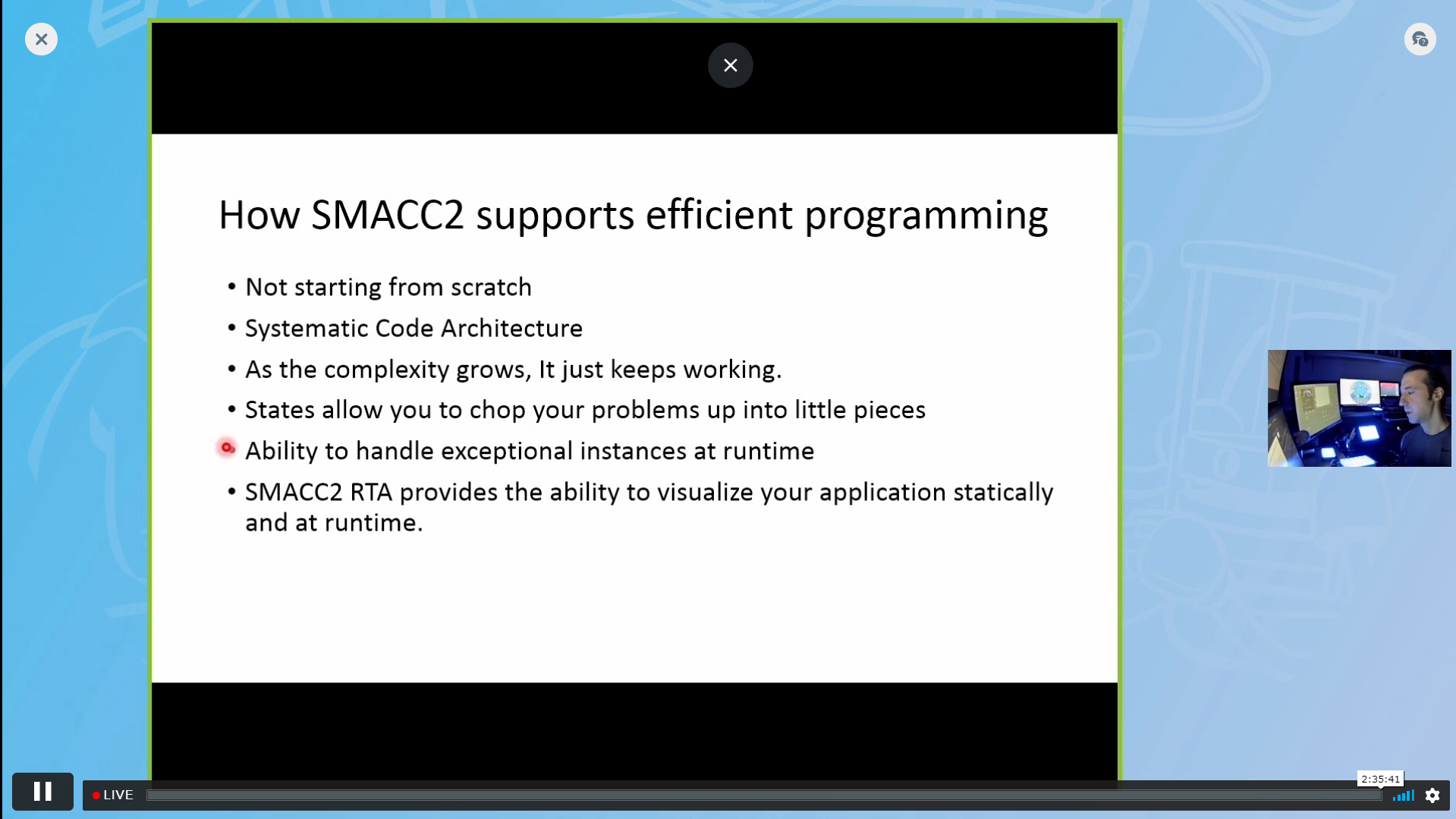

Another one of my favourite talks from day 1 was about SMACC2, a ROS2 version of SMACC - an asynchronous state machine library written in C++. The presenter talked about the excellent features of this library, with demos and examples of robots in the wild using SMACC2. This was definitely useful for me, since I have been intending to use the SMACC library but was waiting to get everything from AKROS ported to ROS2 first.

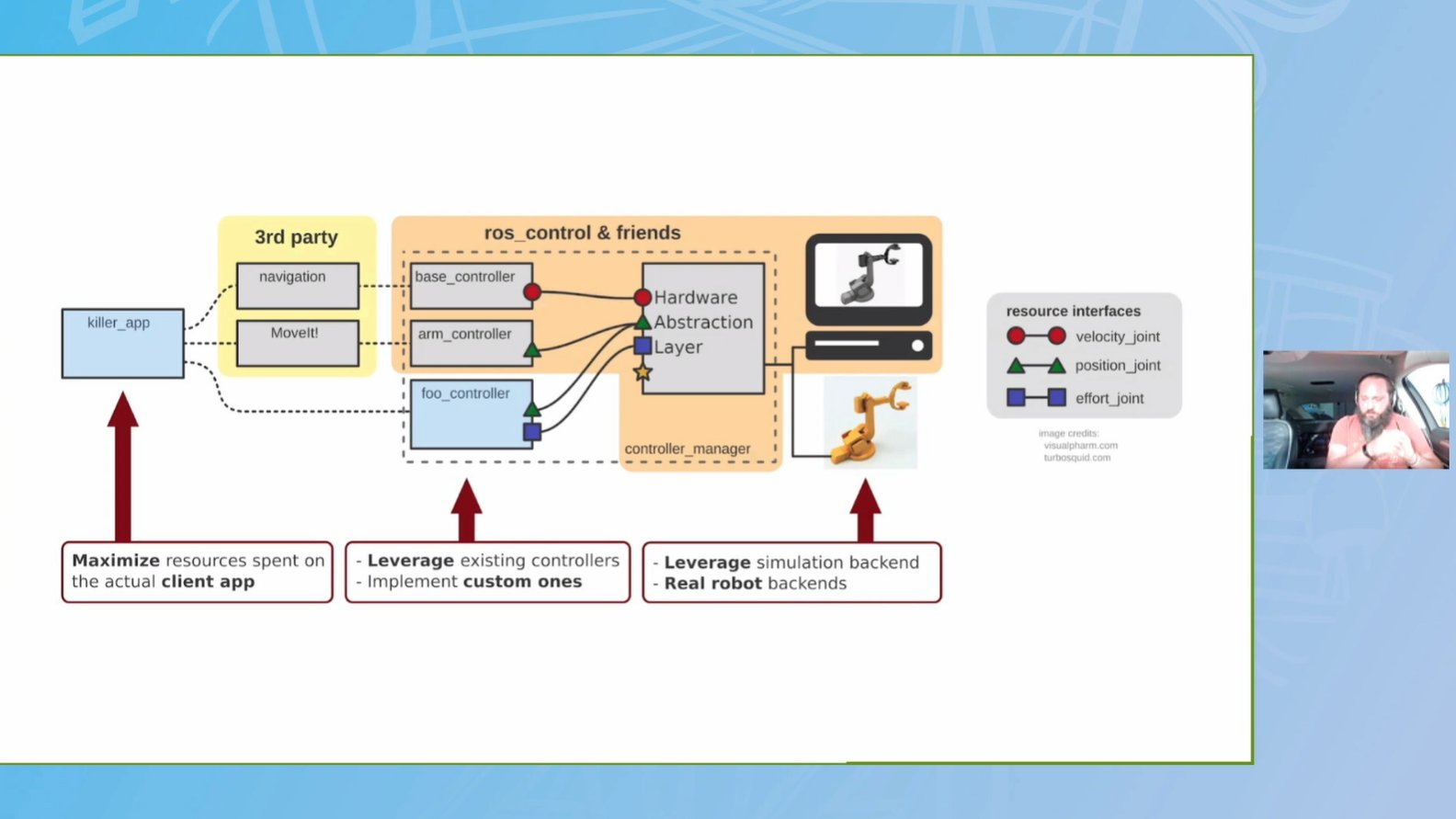

On day 2 of the conference, I decided to chill out a little bit because I was getting exhausted after working in the mornings and attending the Unity workshop and ROS World in the evenings for the last two days. While I did skip a lot of sessions, I enjoyed watching the lightning talks - essentially a show-and-tell by researchers and ROS developers - where I learnt about some cool things like rosboard, a web-based GUI server running on the ROS machine. I was also quite curious about ros_control/ros2_control, which looks like an efficient way of designing and managing low-level controllers. I cannot wait to try this out in ROS2. But without a doubt, my favourite session of day 2 was a talk by a high-school FIRST Robotics Competition (FRC) team called Zebracorns. They talked about how they’ve been using ROS to implement things like particle filters for localization. When I was in high school about 11-12 years ago, the most I could do was program while loops and if/else conditions for a simple robot to move, and these kids were building (quite successfully) things that I only learnt as an engineering student. Crazy!

Other than these few sessions, I mostly spent my time doing the collaborative puzzle. Definitely one of the harder jigsaw puzzles I’ve done, and even harder because the pieces are on a screen. I kept going back to this puzzle during the day, and I was quite lucky to have been playing when we finally finished the puzzle!

Other than sessions and puzzles, a few products were also launched at ROS World. NVIDIA showed off their Isaac simulator SDK update and Luxonis teased their OAK-D Pro version. I’m definitely waiting for the OAK-D Pro to launch - it’s essentially an OAK-D with an IR laser for active depth. I hope they keep the IMU and don’t remove it like they did on the OAK-D Lite. The best launch, in my opinion, was the iRobot Create 3 - an educational Roomba-like platform with all the sensors a Roomba has, but running ROS2. Quite a coincidence, since I was looking at the Create 2 robot a few days earlier and thinking of buying one. The Create 3 would be an excellent platform to try out my AKROS navigation module, both in simulation using Gazebo/Ignition/Unity and IRL. Clearpath Robotics took this a step further and added an RPi4, a lidar and a depth camera to make the Turtlebot4 platform. Definitely the rightful successor to the Turtlebot2, since the Turtlebot3 Waffle and Burger robots are a completely different-looking platform.

Finally, the big announcement about ROSCon 2022. I really enjoyed ROS World, but I was excited about an in-person event and hoping that it would be closer to Europe - and ROSCon 2022 will be held in Kyoto, Japan! While it’s definitely not close to Eindhoven, Japan is a country I’ve wanted to visit ever since graduating, but I had to hold off on my plans because of Covid-19. I guess 2022 is finally the year I visit this incredible country!!

For the coming week, there are a few things I still need to finish before I take the plunge and start porting everything to ROS2. Firstly, I need to tune and configure move_base and the local/global planners to work optimally with the AKROS platform. Next, I need to fix a few bugs/warnings and clean up the launch files. I also need to clean up the CMakeLists.txt and package.xml files because there are quite a few redundant dependencies there. Finally, I need to re-attach the OAK-D and re-calibrate it. I had originally planned on switching to the OAK-D Lite, but I soon realized that it doesn’t come with an IMU and I’d still need an extra port to provide extra power to the device (since the RPi4 USB hub provides only 1.2 A of current in total). Since the DepthAI ROS package has been updated to provide IMU access among other features, the OAK-D is currently the better device for implementing visual-inertial odometry using rtabmap_ros and robot_localization, unlike the OAK-D Lite, which has a similar camera setup but no IMU. I’m still eagerly waiting for the OAK-D Lite though, as it can now go to the project I had planned on using the OAK-D with. This week, I also plan on replacing my RPi4 4GB with an RPi4 8GB. I’m sure this is an improvement, and I hope it also solves the controller frequency (move_base) and sync (rosserial_arduino) issues I’ve been having. More updates next week.