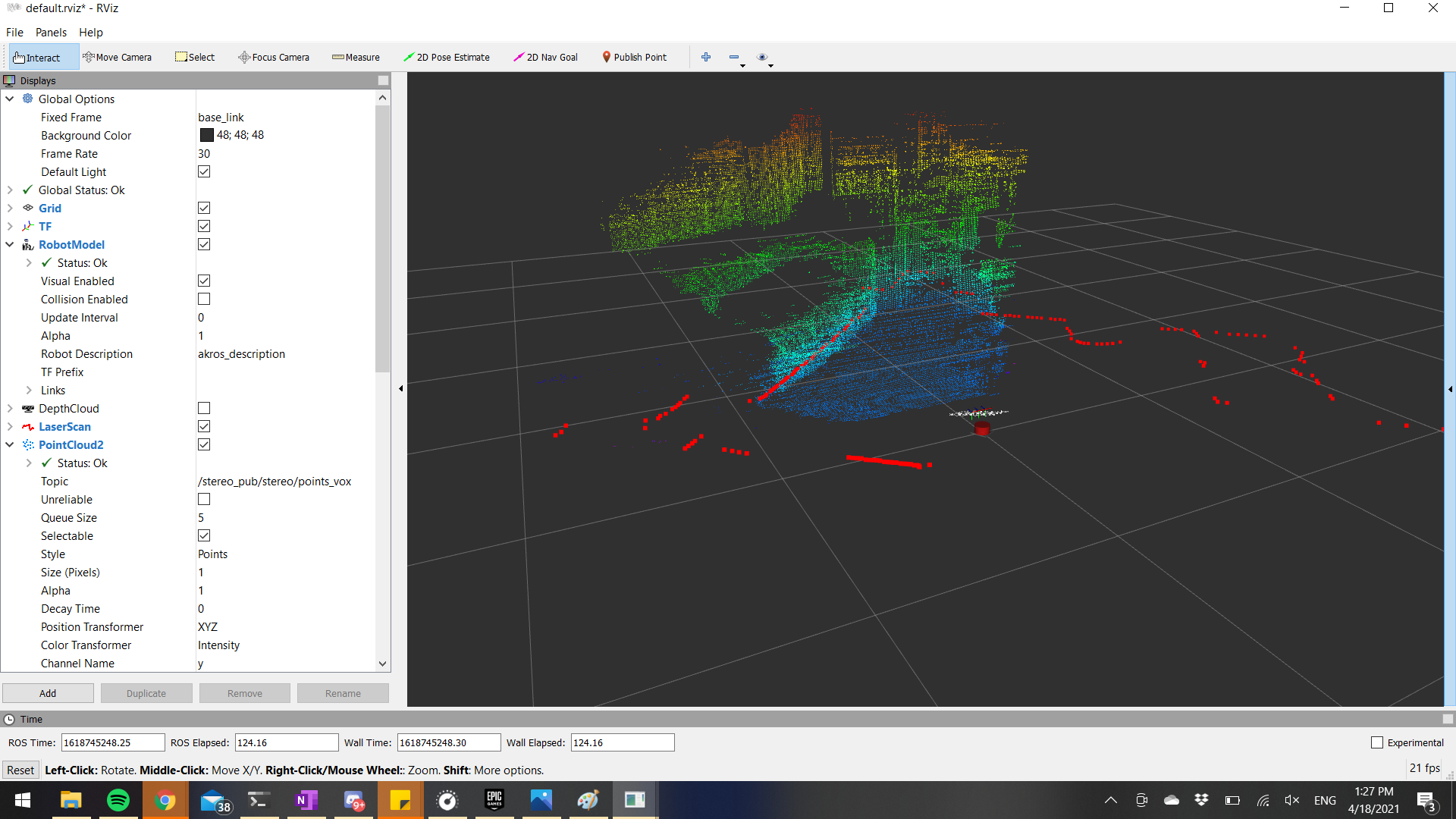

In the last update, I had set up the required nodes to publish a depth image and convert it into a pointcloud message. This weekend, I worked on filtering the noise out of this depth pointcloud. I started by playing with the Point Cloud Library (PCL) and the ROS nodelets it provides. To clean up the pointcloud data, I first ran it through a statistical outlier removal (SOR) filter and then downsampled the output using the VoxelGrid filter. I also ran the laser scan message through some filters that drop readings within a given angle range (the ones from behind the laser scanner, which we do not want to use). The resulting filtered pointcloud and laserscan outputs can be seen below:

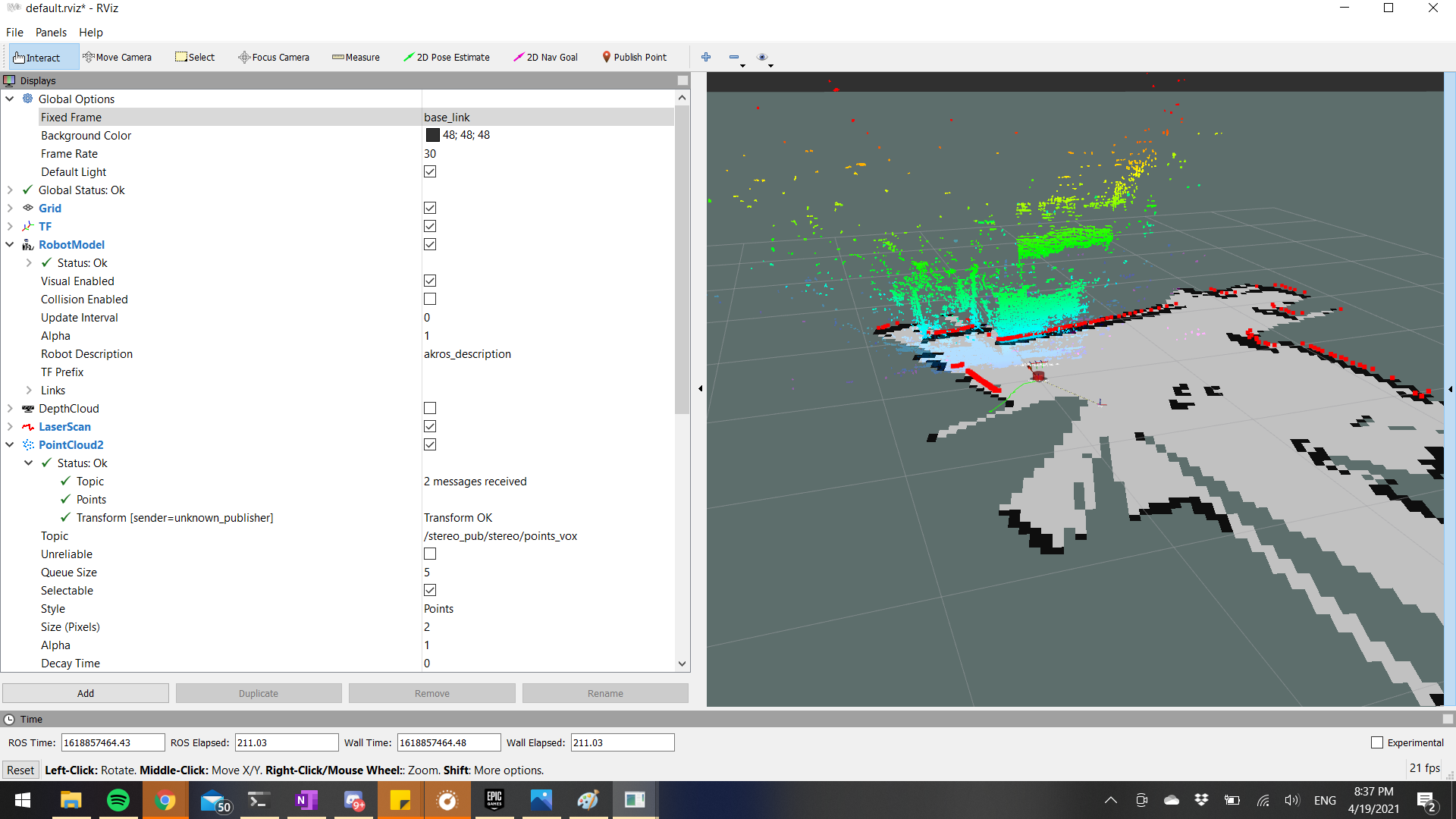

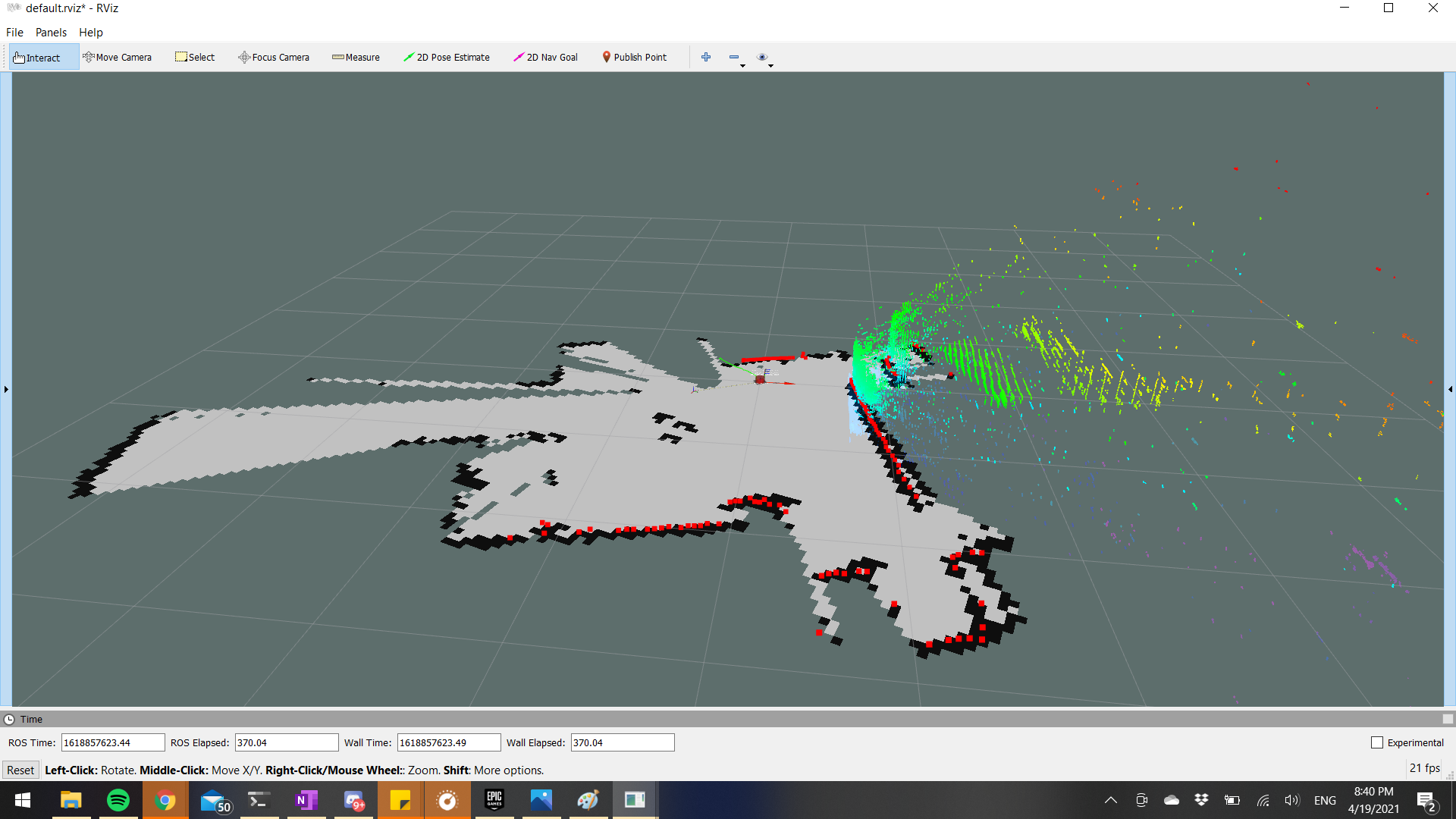
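For anyone curious what these filters do under the hood, here is a minimal pure-Python sketch of the three ideas: statistical outlier removal, voxel-grid downsampling, and masking a laser scan by angle. This is not the PCL or ROS code used in this project, which runs as nodelets. The function names and the parameters `k`, `std_ratio`, `leaf_size`, `lower`, and `upper` are made up for illustration, and the brute-force neighbour search is only practical for small clouds.

```python
import math
from collections import defaultdict


def statistical_outlier_removal(points, k=8, std_ratio=1.0):
    """Drop points whose mean distance to their k nearest neighbours
    is more than std_ratio standard deviations above the global mean."""
    if len(points) <= k:
        return list(points)
    mean_dists = []
    for i, p in enumerate(points):
        # Brute-force neighbour search: O(n^2), fine for a small demo.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(dists[:k]) / k)
    mu = sum(mean_dists) / len(mean_dists)
    sigma = math.sqrt(sum((d - mu) ** 2 for d in mean_dists) / len(mean_dists))
    threshold = mu + std_ratio * sigma
    return [p for p, d in zip(points, mean_dists) if d <= threshold]


def voxel_grid_downsample(points, leaf_size=0.05):
    """Replace all points that fall into the same cubic voxel by their centroid."""
    voxels = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / leaf_size) for c in p)
        voxels[key].append(p)
    return [
        tuple(sum(axis) / len(pts) for axis in zip(*pts))
        for pts in voxels.values()
    ]


def mask_scan_angles(ranges, angle_min, angle_increment, lower, upper):
    """Set readings whose beam angle lies in [lower, upper] to NaN.
    Assumes lower <= upper; wrap-around at +/- pi is not handled."""
    masked = []
    for i, r in enumerate(ranges):
        angle = angle_min + i * angle_increment
        masked.append(float("nan") if lower <= angle <= upper else r)
    return masked
```

Running the outlier filter before downsampling, as in the pipeline above, means stray points are removed before they can pull voxel centroids away from the real surface.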

Once I had the laserscan and the pointclouds filtered according to my requirements, I set up Hector SLAM using a tutorial I found on YouTube. With the default setup, I now have a rudimentary mapping/SLAM application which works sufficiently well. The following images show the outputs of the Hector SLAM application in RViz.

I still need to finish the depth-RGB alignment using the interfaces provided by Luxonis. I will need some help from their Discord group for this, so I’m not planning on completing it right away. Instead, I will try to tune the Hector SLAM parameters to get even better performance, and meanwhile I also plan on spending some time implementing some MobileNet applications using DepthAI and ROS. More about this in the next post.