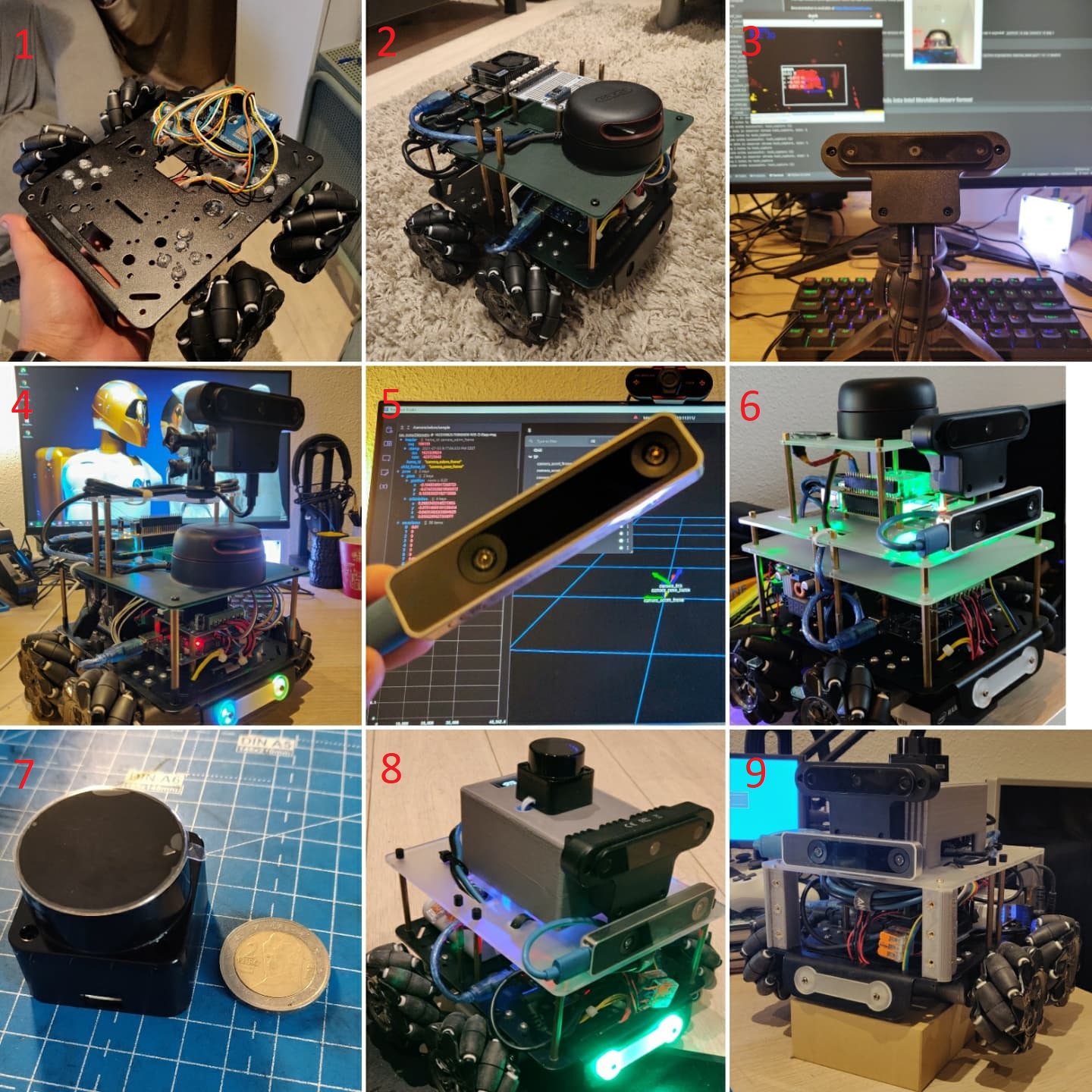

2021 has been a personal highlight for me as I stuck with one side-project throughout the year instead of scuttling between multiple projects. Unlike in 2020, when I was just experimenting with rapid prototyping tools and ROS packages, 2021 started with a plan - to build an autonomous ROS robot with mecanum wheels (for omnidirectional motion), visual-inertial odometry, and the ROS 1 navigation stack. I started off with an off-the-shelf omniwheel chassis I had purchased a few years earlier, and over the year I built on top of that, adding/replacing sensors, designing and prototyping mechanical parts, and developing/configuring the ROS software packages. In the course of this post, I will go into more detail about the different stages of my progress. Warning: this is a very long post.

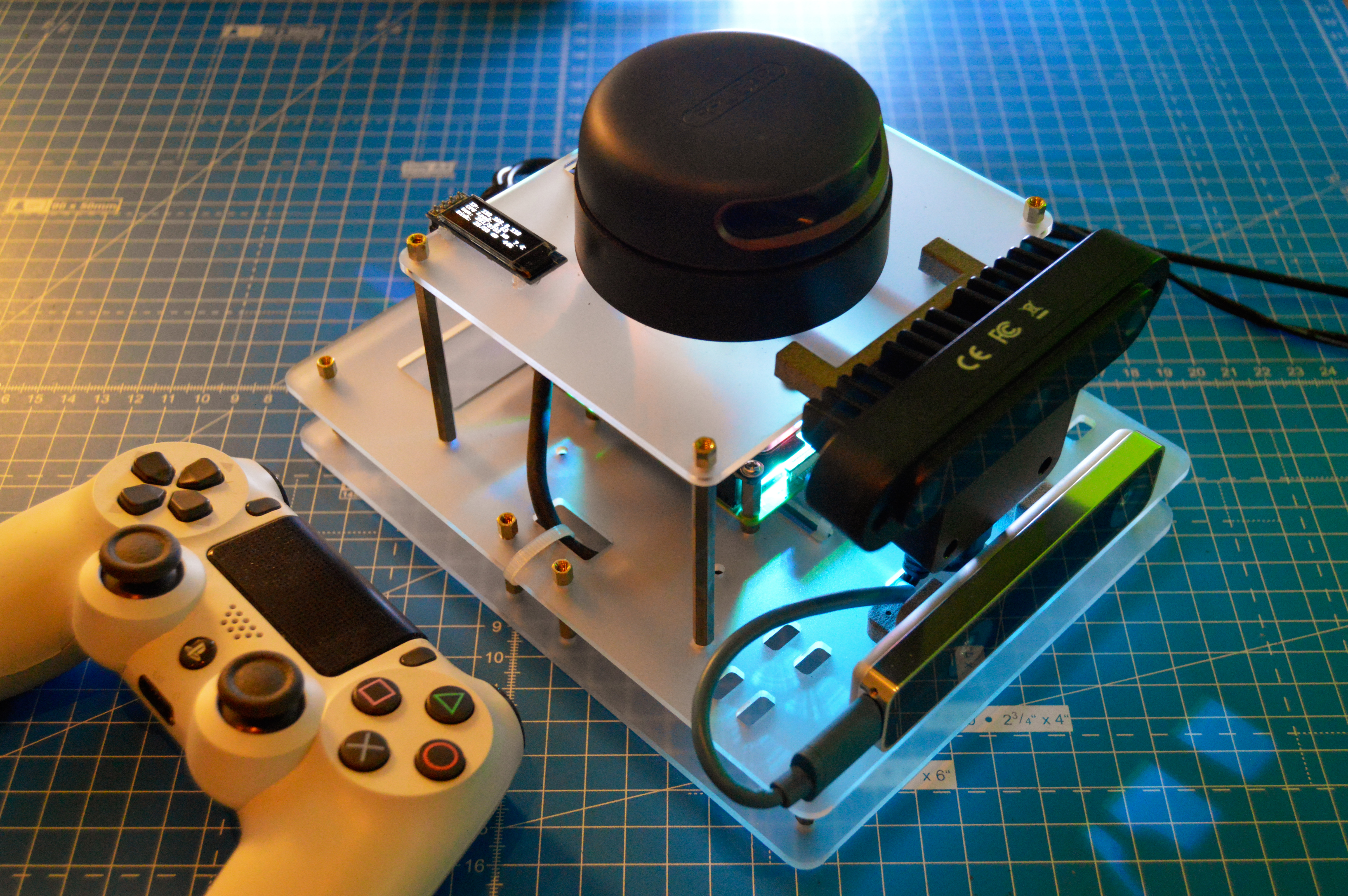

Starting off: I started off in January 2021 with a bare mecanum wheel chassis, and an ambition to build on top of it. At the end of 2020, I had used the innovation budget provided by my employers to purchase an RPLidar A2 and a Raspberry Pi 4 (4GB). I had also pre-ordered an OAK-D camera from the successful Kickstarter campaign by Luxonis, and I was expecting to receive it early in 2021. I first designed base plates for the OAK-D, the RPLidar and the RPi4 and got them laser cut by Snijlab. Snijlab is a really cool and very convenient service for on-demand laser cutting in the Netherlands, and has manufactured most of the laser-cut parts in this project. These laser-cut parts gave me an indication of how the robot was going to look, and how all the parts fit together. The base plate also divided the robot into distinct parts - the base module with the motors, the battery and the motion control electronics (Arduino, motor driver, emergency-stop button - all to be added), and the navigation module with the Raspberry Pi, the OAK-D and the RPLidar. In March 2021, once I was done designing the parts, setting up ROS Noetic on the Raspberry Pi and testing the RPLidar, the OAK-D finally arrived and I was able to put everything together for the first time. Now, I could start playing with this new camera.

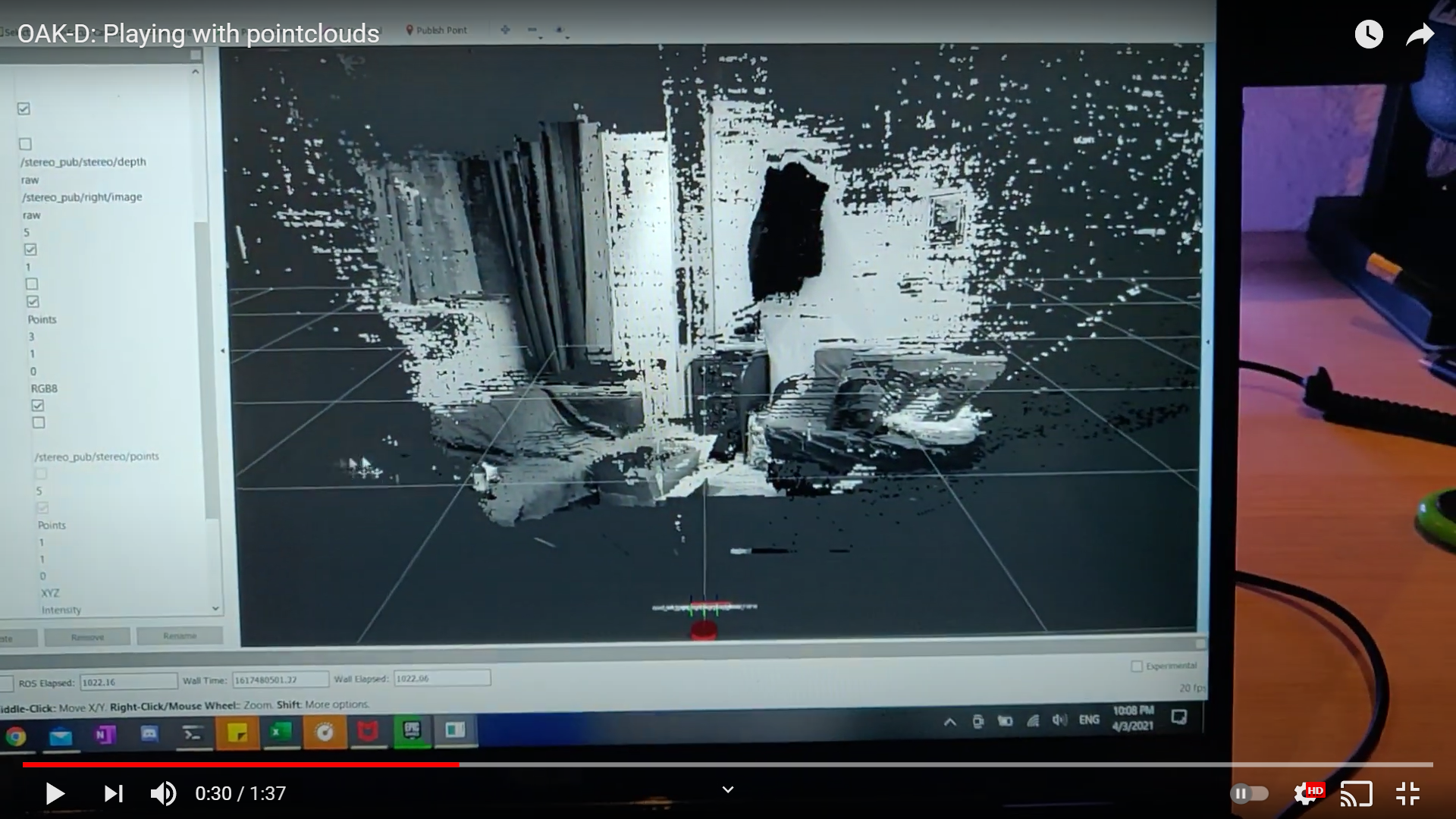

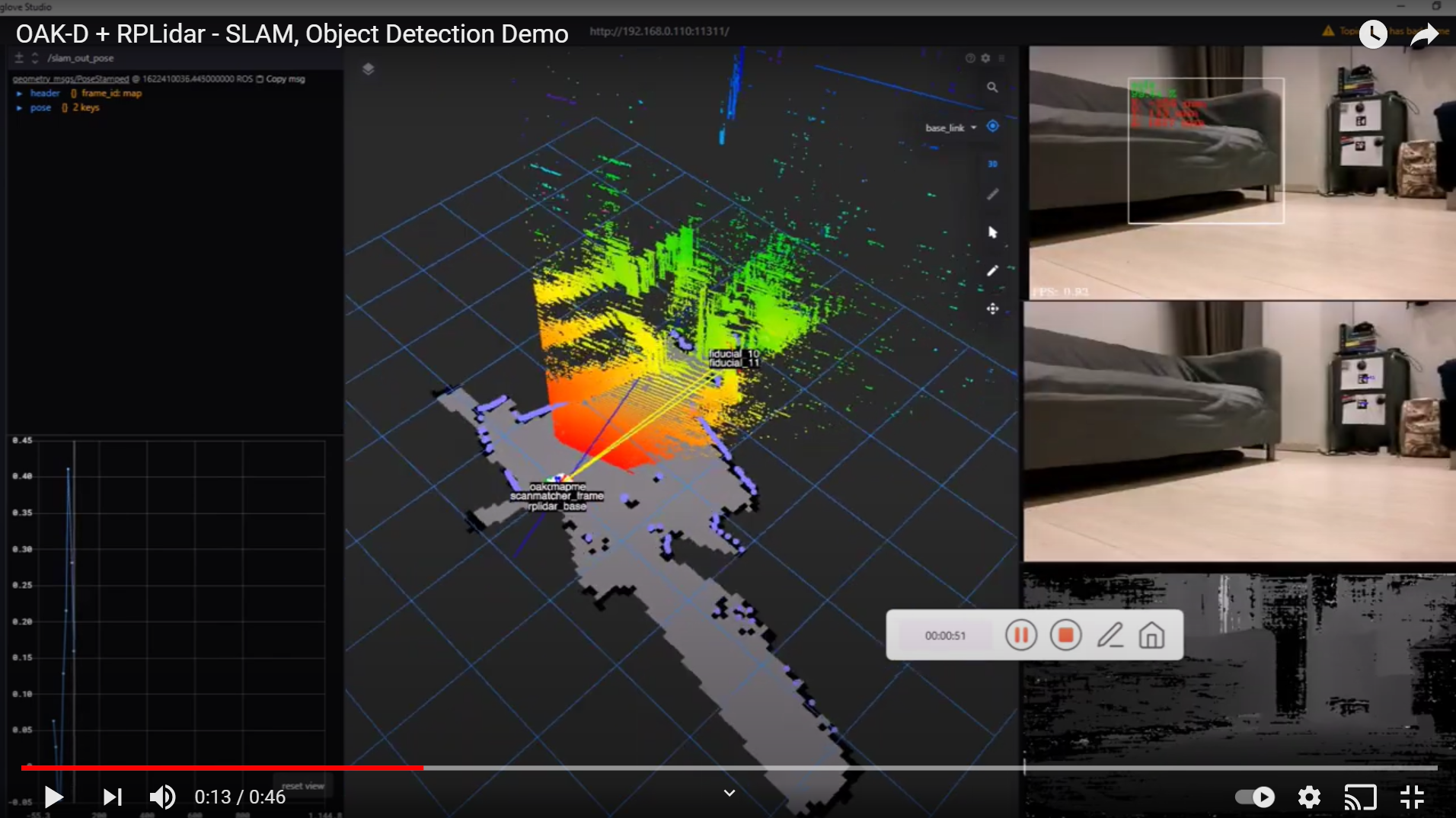

OAK-D and PointClouds: I received the OAK-D from a Kickstarter campaign. The product was brand new, and without a lot of software support, especially for ROS. It took me a month to experiment with their API and some experimental ROS packages available at the time. I also worked on making my own ROS driver for it, but soon dropped the idea as the official ROS support started gaining speed. In April, I first used ROS packages to generate pointclouds from the OAK-D depth stream, and then used these pointclouds to generate a laser scan. I was able to validate this against the RPLidar laser scan. Next, I worked with pointcloud filters, which I used to filter the pointcloud for a better frame rate. I also filtered the RPLidar scan to remove the region where the sensor is occluded by the OAK-D mounts. The OAK-D also has an RGB camera, so I decided to use that to detect fiducial markers. But the Kickstarter devices came without factory calibration of the RGB camera, so I had to do that first. Then, in May, I implemented fiducial marker detection and fiducial SLAM.
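To give an idea of what the pointcloud-to-laser-scan step does, here is a minimal, ROS-free sketch in plain Python. It is not the ROS package used on the robot: the function name, the height band, the field of view and the bin count are all assumptions chosen for illustration. Points inside a thin height band are projected onto the ground plane, and each angular bin keeps the nearest range it sees.

```python
import math


def pointcloud_to_scan(points, angle_min=-math.pi / 2, angle_max=math.pi / 2,
                       num_bins=180, z_min=-0.1, z_max=0.1, range_max=10.0):
    """Collapse 3D points (x forward, y left, z up, in metres) into a 2D scan.

    Returns one range per angular bin; bins that saw no point hold math.inf.
    All default values are hypothetical, not taken from the robot.
    """
    ranges = [math.inf] * num_bins
    bin_width = (angle_max - angle_min) / num_bins
    for x, y, z in points:
        if not (z_min <= z <= z_max):
            continue  # outside the height band that forms the virtual scan plane
        r = math.hypot(x, y)
        if r == 0.0 or r > range_max:
            continue  # ignore the sensor origin and points that are too far away
        angle = math.atan2(y, x)
        if not (angle_min <= angle < angle_max):
            continue  # outside the field of view of the virtual scanner
        idx = min(int((angle - angle_min) / bin_width), num_bins - 1)
        ranges[idx] = min(ranges[idx], r)  # keep the closest obstacle per bin
    return ranges
```

Comparing such a virtual scan against a real lidar scan of the same scene, bin by bin, is one simple way to sanity-check the depth data.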

Meanwhile, I was getting busy with work and freelance projects, so I took some time off this robot. It was during this time that I started getting active on Twitter and soon found out about Foxglove. It’s an excellent visualization tool that works on Windows and does not need any separate installation of Linux or ROS. In June, I was able to use Foxglove to visualize the pointclouds and detected fiducial markers. Around this time, Foxglove team members happened to find my blog and reached out to me to write an article about my lockdown project. This spotlight article was shared on all social media platforms, and generated a lot of publicity for my project, which really helped me network with other roboticists, students/hobbyists and makers, and even led to meetings with startups about potential jobs and freelance projects. I also learnt how to use Twitter as a networking tool, and it has certainly helped me get in touch with the right people, better than LinkedIn.

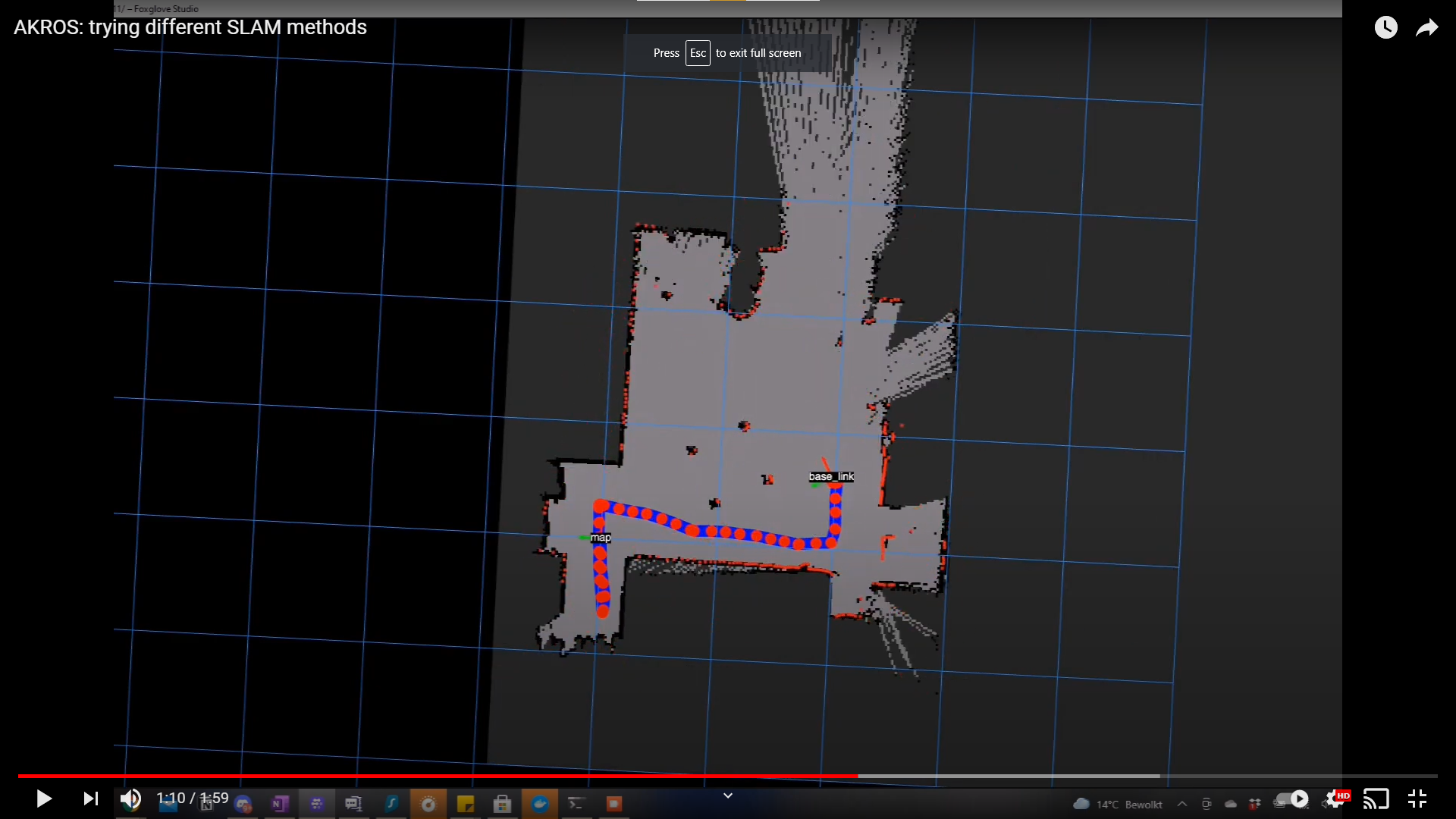

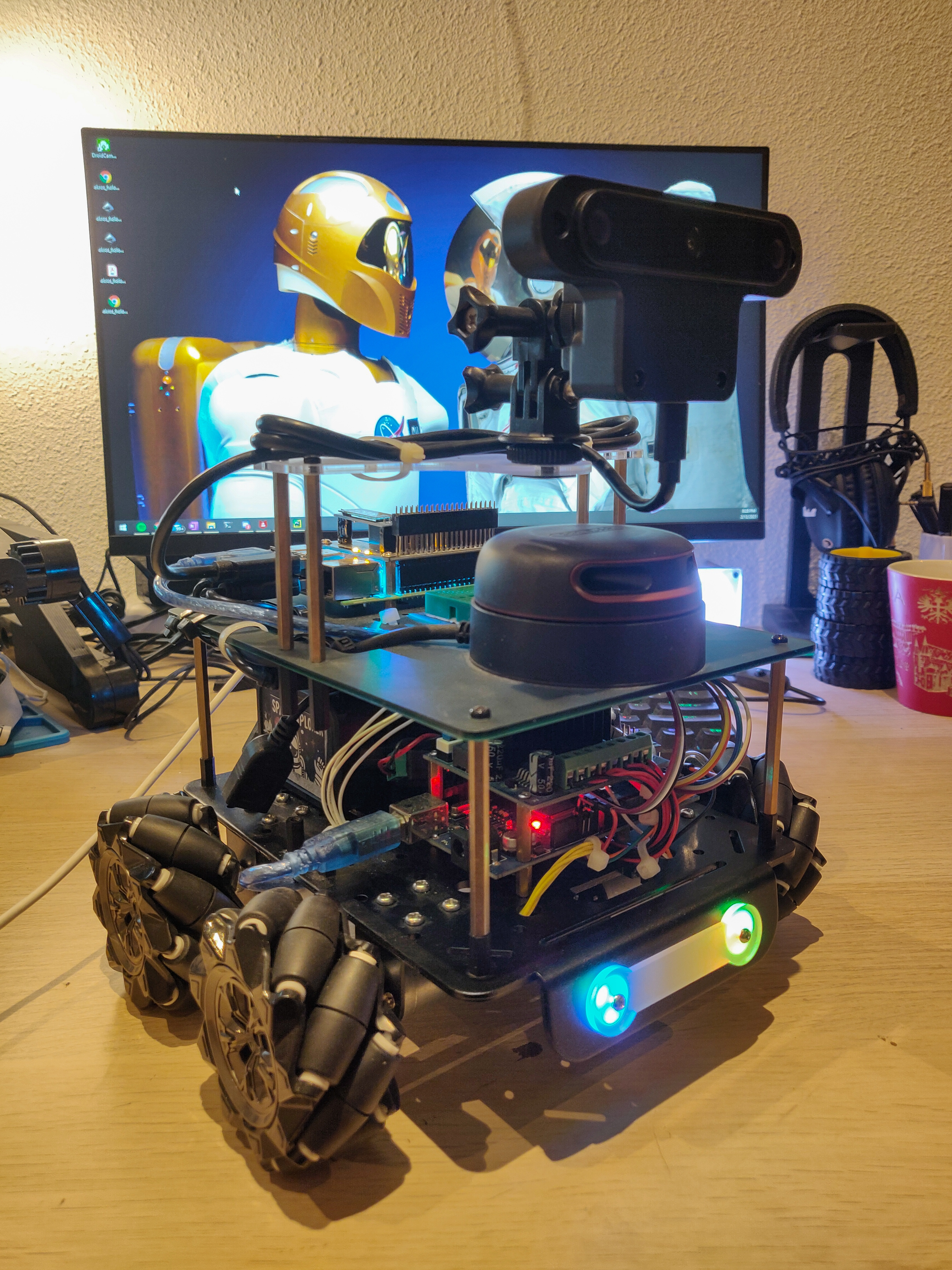

Testing different SLAM methods: By this time, I had tried two SLAM methods already - Fiducial SLAM using the OAK-D and Hector SLAM using the filtered RPLidar scan. I also wanted to try other SLAM methods, but most required an odometry source. As I was yet to implement the low-level control and wheel odometry, I decided to get myself an Intel T265 tracking camera. The goal was to replicate the same visual-inertial odometry with the OAK-D and then use the T265 to validate it. But for now, I had an odometry source. In July, I re-designed the navigation module to accommodate this new sensor. I switched the OAK-D and the RPLidar positions, putting the lidar higher so that it is not occluded anymore. This meant that I no longer needed the laser scan filter.

Once the parts arrived, I assembled them onto the navigation module, but I still needed the base module working in order to drive the robot around to generate maps. So, in August, I wired up the base module, and wrote a simple open-loop program to drive the robot using a PS4 controller. This let me practice driving the robot, and while doing so, I could also test Hector SLAM (and other SLAM methods) by generating maps of my room. In August, I also decided to implement and test GMapping, another commonly used SLAM/mapping method. This implementation also led me to compare these SLAM methods by not only generating maps, but also by using these generated maps to localize the robot. In this update, I also experimented with different batteries, and ended up with a mini UPS that not only works very efficiently, but also allows me to charge it without needing to unplug it from the robot or even turn the robot off. Finally, in September, I tested slam_karto and slam_toolbox and compared all four SLAM methods, ending up with Steve Macenski’s SLAM Toolbox as the preferred choice, at least for now.

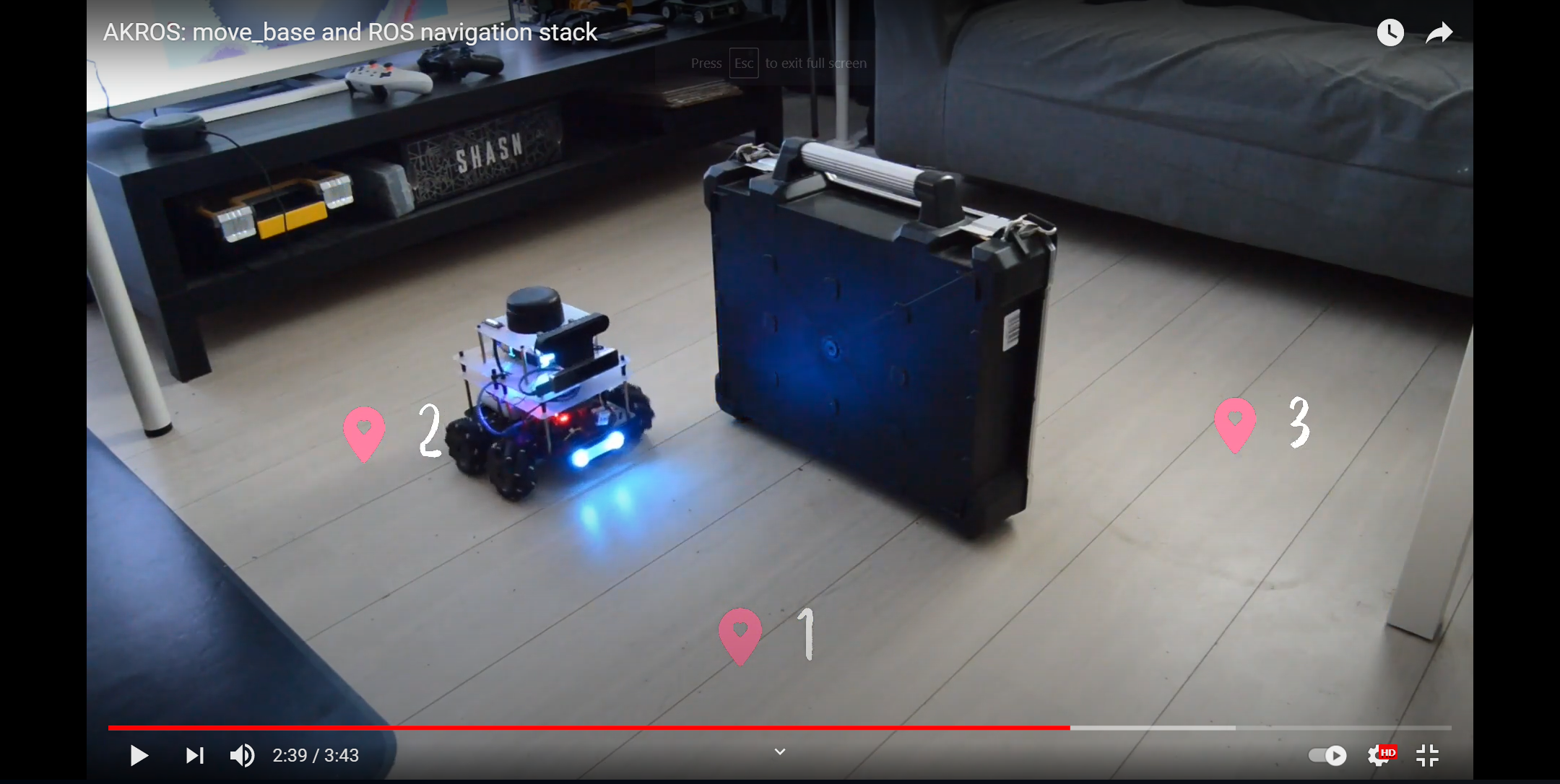

ROS Navigation Stack: By this time in mid-September, I had a robot I could drive around manually using a PS4 controller to generate maps of my room, and I could also drive the robot around while localizing within these generated maps. Finally, it was time to get the robot to drive itself. By the end of September, I had the first rudimentary implementation of the ROS navigation stack. Using the lidar scans and the odometry information from the T265, the navigation stack was able to generate trajectories and corresponding linear/angular velocities to user-defined target points within the map. I was quite blown away by how it worked on the first try with most parameters at their default values. I also decided to replace the RPLidar A2 with an LD06 lidar from LDRobot, as the RPLidar was quite bulky and induced a lot of vibrations. For now, I used a glue gun to attach the LD06 in place, on the old RPLidar mount.

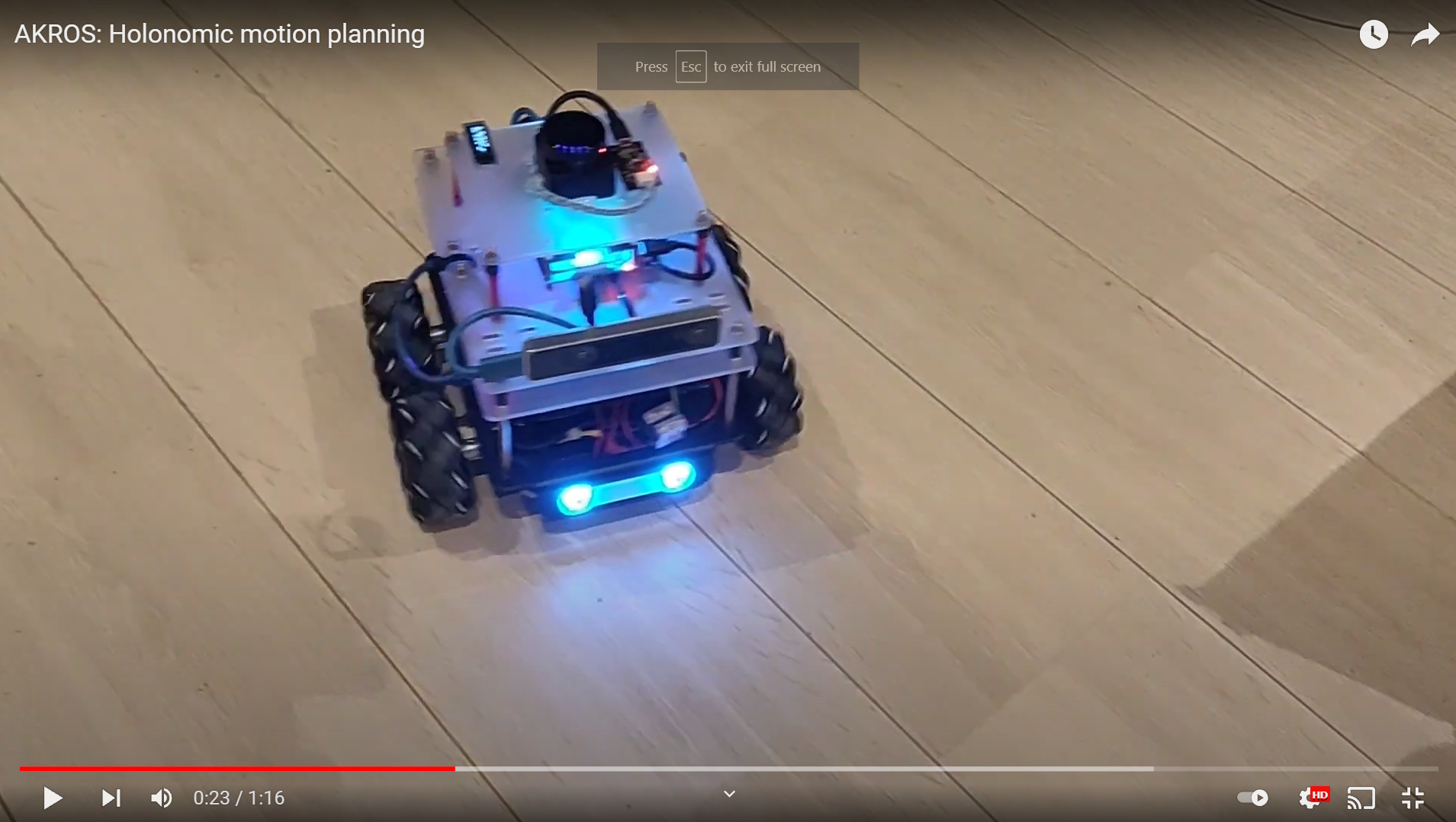

One of the issues with my first implementation of autonomous navigation was that the robot still moved like a differential-drive robot. The navigation stack only provided strafing velocities to the robot in emergency situations when the robot was too close to an obstacle, or during recovery scenarios when the robot was trying to get unstuck. In order for the robot to move in a holonomic fashion, I experimented with the available motion planners. Finally, in October, I was able to use dwa_local_planner for autonomous, holonomic motion planning.
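For readers new to mecanum wheels, the sketch below shows why strafing velocities matter: a body velocity command (forward, sideways, rotation) maps to four individual wheel speeds, and a pure sideways command turns the wheels in a pattern that a differential-drive robot cannot reproduce. This is the standard textbook mecanum model in plain Python, not the code running on the robot. The geometry constants are made-up values, and the sign convention (x forward, y left, counter-clockwise positive) is an assumption that may need flipping for a particular chassis.

```python
from typing import Tuple

# Hypothetical geometry, for illustration only (not measured from the robot).
WHEEL_RADIUS = 0.04  # metres
HALF_LENGTH = 0.10   # half the front-to-rear wheel distance, metres
HALF_WIDTH = 0.09    # half the left-to-right wheel distance, metres

Wheels = Tuple[float, float, float, float]  # order: FL, FR, RL, RR


def twist_to_wheel_speeds(vx: float, vy: float, wz: float) -> Wheels:
    """Inverse kinematics: body velocities -> wheel angular speeds (rad/s)."""
    k = HALF_LENGTH + HALF_WIDTH
    fl = (vx - vy - k * wz) / WHEEL_RADIUS
    fr = (vx + vy + k * wz) / WHEEL_RADIUS
    rl = (vx + vy - k * wz) / WHEEL_RADIUS
    rr = (vx - vy + k * wz) / WHEEL_RADIUS
    return fl, fr, rl, rr


def wheel_speeds_to_twist(fl: float, fr: float, rl: float, rr: float):
    """Forward kinematics: wheel angular speeds -> body velocities."""
    k = HALF_LENGTH + HALF_WIDTH
    vx = WHEEL_RADIUS * (fl + fr + rl + rr) / 4.0
    vy = WHEEL_RADIUS * (-fl + fr + rl - rr) / 4.0
    wz = WHEEL_RADIUS * (-fl + fr - rl + rr) / (4.0 * k)
    return vx, vy, wz
```

With these equations, a pure strafe to the left spins the front-left and rear-right wheels backwards while the other two spin forwards. The forward kinematics is the same relation that turns measured wheel speeds into a velocity estimate for odometry.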

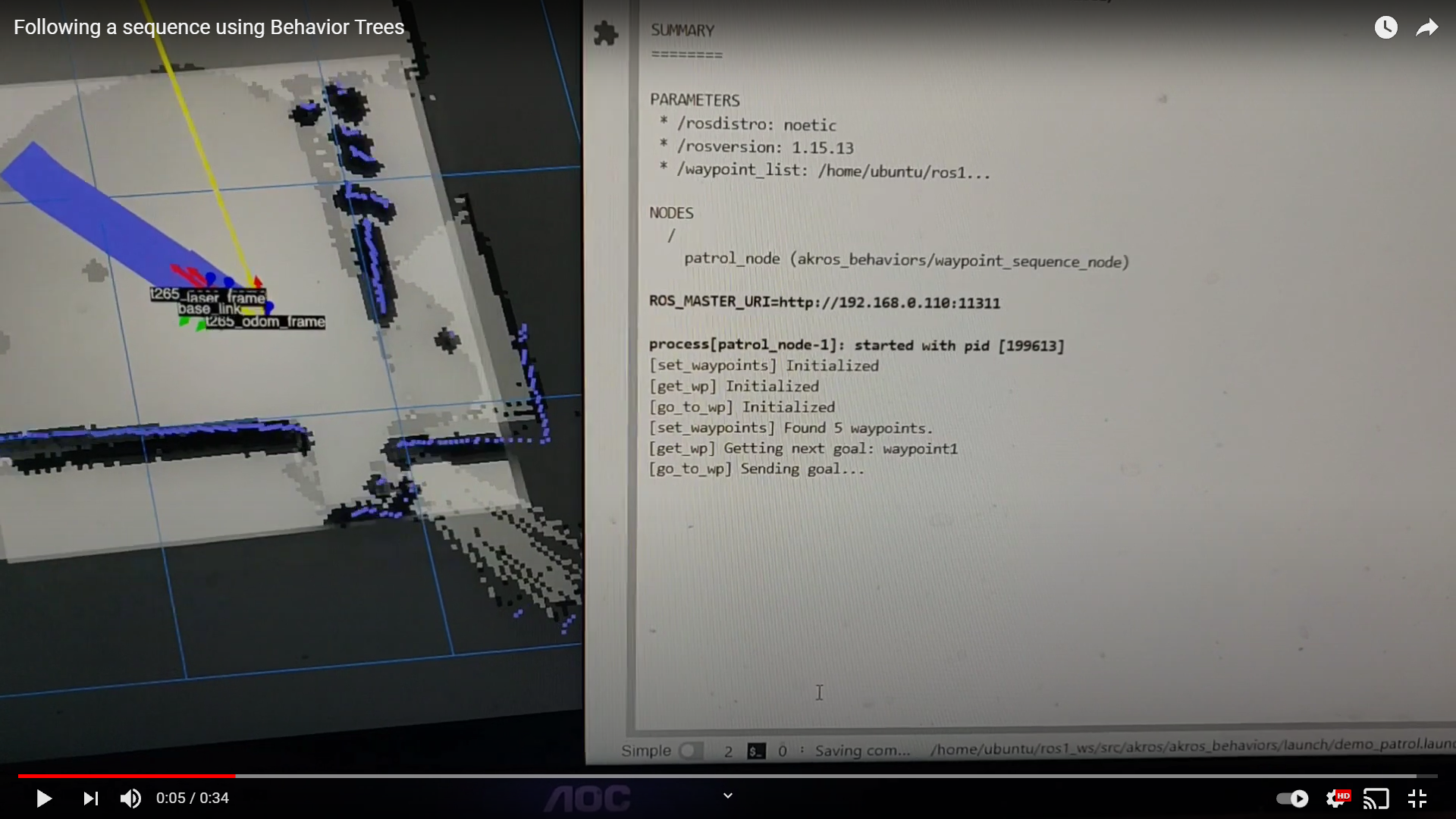

High-level behavior: Once the navigation stack was well tuned and the navigation performance was sufficiently good, I jumped into developing high-level behaviors. The first behavior I wanted to implement was waypoint following. I wanted the robot to traverse a sequence of pre-determined waypoints on a pre-generated map. While I started off playing with state machines, I decided to explore Behavior Trees as I had never implemented one before. In October, I implemented my first behavior tree to load a waypoint from a file, go to that waypoint and if successful, load the next waypoint and repeat. If unsuccessful, the robot stops. While this worked very well, it was still a manual process to generate the list of waypoints to follow.
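The structure of that tree can be sketched in a few lines of plain Python. This is a simplified illustration, not the behavior tree library or the nodes used on the robot: real behavior trees also have a RUNNING status for long-lived actions, and the `navigate` callback here is a hypothetical stand-in for sending a goal to the navigation stack and waiting for the result.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = 1
    FAILURE = 2


class LoadNextWaypoint:
    """Leaf node: puts the next waypoint on the blackboard, fails when none are left."""

    def __init__(self, waypoints, blackboard):
        self._iter = iter(waypoints)
        self._bb = blackboard

    def tick(self):
        try:
            self._bb["goal"] = next(self._iter)
        except StopIteration:
            return Status.FAILURE
        return Status.SUCCESS


class GoToWaypoint:
    """Leaf node: calls navigate(goal) (hypothetical) and reports the outcome."""

    def __init__(self, navigate, blackboard):
        self._navigate = navigate
        self._bb = blackboard

    def tick(self):
        return Status.SUCCESS if self._navigate(self._bb["goal"]) else Status.FAILURE


class Sequence:
    """Composite node: ticks children in order and fails on the first failure."""

    def __init__(self, children):
        self._children = children

    def tick(self):
        for child in self._children:
            if child.tick() is Status.FAILURE:
                return Status.FAILURE
        return Status.SUCCESS


def follow_waypoints(waypoints, navigate):
    """Repeat the load -> go sequence until it fails; return waypoints reached."""
    blackboard = {}
    tree = Sequence([LoadNextWaypoint(waypoints, blackboard),
                     GoToWaypoint(navigate, blackboard)])
    reached = 0
    while tree.tick() is Status.SUCCESS:
        reached += 1
    return reached
```

The loop stops either when the waypoint list is exhausted or when a navigation attempt fails, which mirrors the "if unsuccessful, the robot stops" rule described above.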

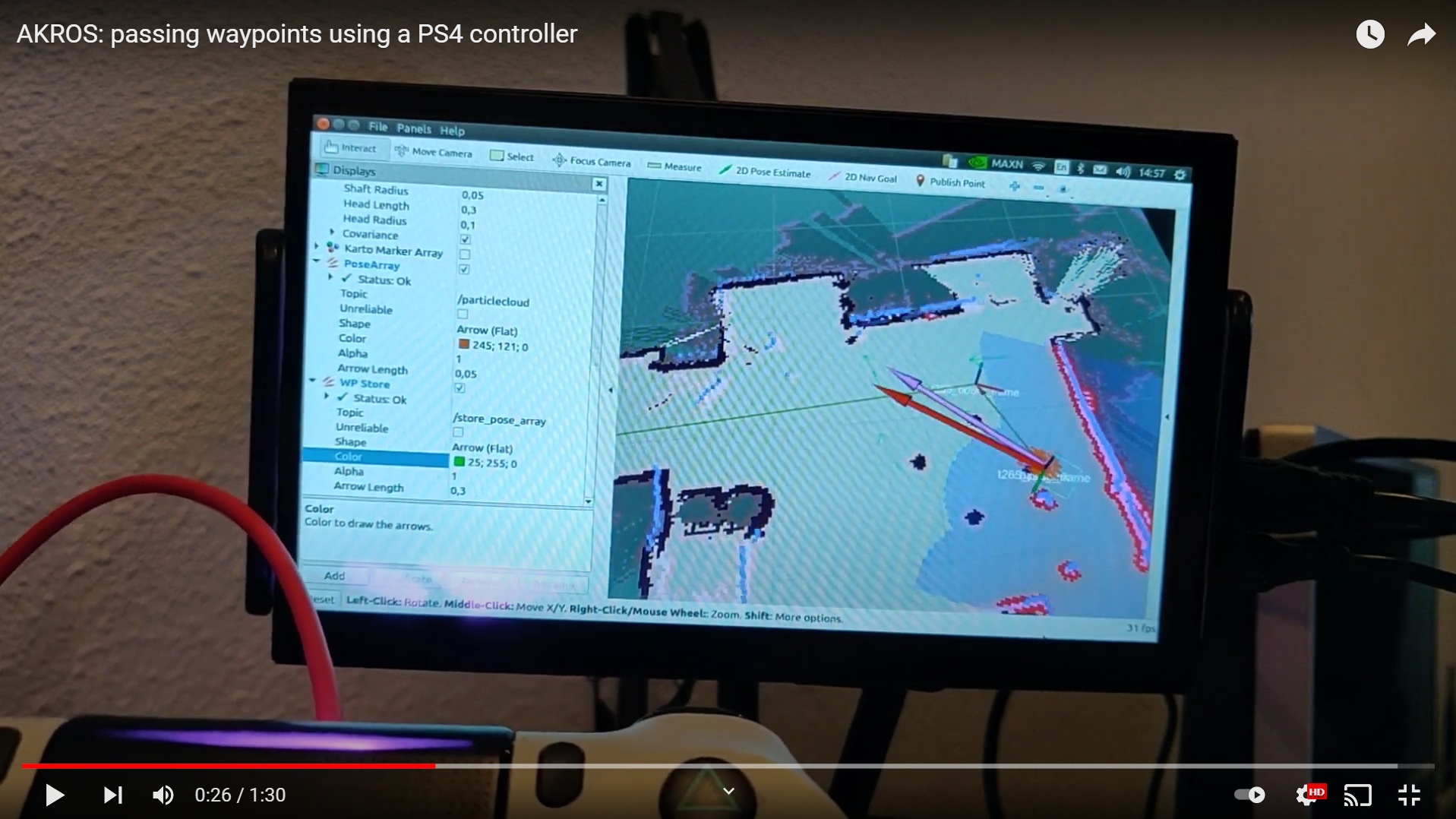

So, I designed and implemented a way to record waypoints using the PS4 controller and play them back when needed. In this update, I also introduced the concept of modes - in teleop mode, the recording mode can be activated which lets the user record a waypoint by pressing a button on the PS4 controller. The waypoint is the estimated pose when the button is pressed, and is stored in a local file. When the robot is switched to the autonomous mode using the PS4 controller, the playback mode can be activated by the press of a button. In this mode, the playback node cycles through the local file with the recorded waypoints and follows them one by one.
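A minimal sketch of the record and playback idea, in plain Python without ROS, could look like this. The class name, the mode strings and the one-JSON-object-per-line file format are assumptions made for illustration and do not describe the actual file format used on the robot.

```python
import json
import os
import tempfile


class WaypointRecorder:
    """Appends the current pose to a file when the record button is pressed."""

    def __init__(self, path):
        self.path = path
        self.mode = "teleop"    # "teleop" or "auto" (hypothetical mode names)
        self.recording = False  # recording is only honoured in teleop mode

    def on_record_button(self, pose):
        """Store pose = (x, y, yaw). Returns True if a waypoint was written."""
        if self.mode != "teleop" or not self.recording:
            return False
        x, y, yaw = pose
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps({"x": x, "y": y, "yaw": yaw}) + "\n")
        return True


def load_waypoints(path):
    """Read the recorded waypoints back, in the order they were recorded."""
    waypoints = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:
                entry = json.loads(line)
                waypoints.append((entry["x"], entry["y"], entry["yaw"]))
    return waypoints


if __name__ == "__main__":
    # Small demo: record one pose in teleop mode, then read the file back.
    demo_path = os.path.join(tempfile.mkdtemp(), "waypoints.jsonl")
    recorder = WaypointRecorder(demo_path)
    recorder.recording = True
    recorder.on_record_button((1.0, 2.0, 0.5))
    print(load_waypoints(demo_path))
```

Appending one line per waypoint keeps the file valid even if the robot is switched off mid-recording, and the playback side only needs to read the lines in order.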

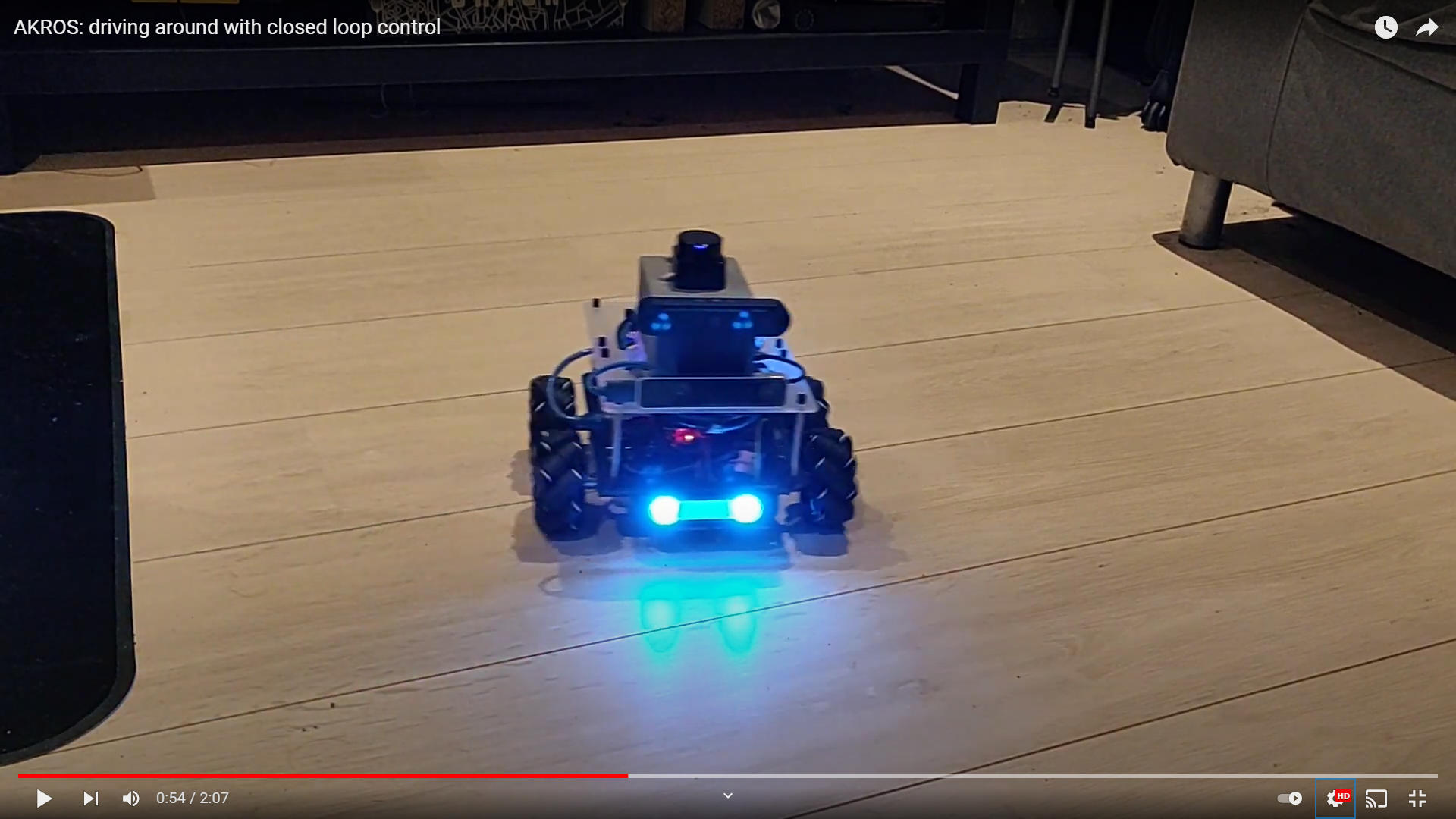

Improving low-level control: After configuring both the ROS navigation stack and the high-level behaviors, the robot still did not move very smoothly. The robot only reached its target goal after multiple recovery attempts, as it overshot the target several times. This was all due to the open-loop controller running on the Arduino. To close the loop, I first needed to wire and test the wheel encoders, and then measure the RPM of each motor and apply a PID controller. In December, I did just this - I first wired and tested the encoders, then implemented PID controllers for each motor to reach the reference RPM. However, while the closed loop worked fine on the Arduino, I also wanted to publish the robot’s measured velocities to the Raspberry Pi so that I could generate an odometry transform. This publisher unfortunately did not work due to the serial bus getting overloaded. I had to reconfigure the communication with the Arduino, but I was finally able to fix it in my last update of 2021.
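The per-motor control loop is a standard PID velocity controller. The real controller runs on the Arduino; the sketch below is plain Python for readability, and the gains, the PWM limits and the toy motor model used to exercise it are made-up values, not the ones tuned on the robot.

```python
class PID:
    """PID controller with output clamping and simple anti-windup."""

    def __init__(self, kp, ki, kd, out_min=-255.0, out_max=255.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self._integral = 0.0
        self._prev_error = None

    def update(self, reference, measured, dt):
        """Return the motor command for one control step of length dt seconds."""
        if dt <= 0.0:
            raise ValueError("dt must be positive")
        error = reference - measured
        integral = self._integral + error * dt
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        output = self.kp * error + self.ki * integral + self.kd * derivative
        clamped = max(self.out_min, min(self.out_max, output))
        if clamped == output:
            # Anti-windup: only accumulate the integral while not saturated.
            self._integral = integral
        return clamped


def simulate(pid, reference_rpm, steps=500, dt=0.01, gain=0.5, tau=0.1):
    """Run the controller against a toy first-order motor model (hypothetical).

    The model assumes the steady-state RPM is gain * pwm and that the motor
    approaches it with time constant tau seconds.
    """
    rpm = 0.0
    for _ in range(steps):
        pwm = pid.update(reference_rpm, rpm, dt)
        rpm += (gain * pwm - rpm) * dt / tau
    return rpm
```

Calling `simulate(PID(1.0, 10.0, 0.0), 60.0)` drives the toy motor towards 60 RPM. On real hardware, `measured` would come from the encoder counts over each control period instead of a model.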

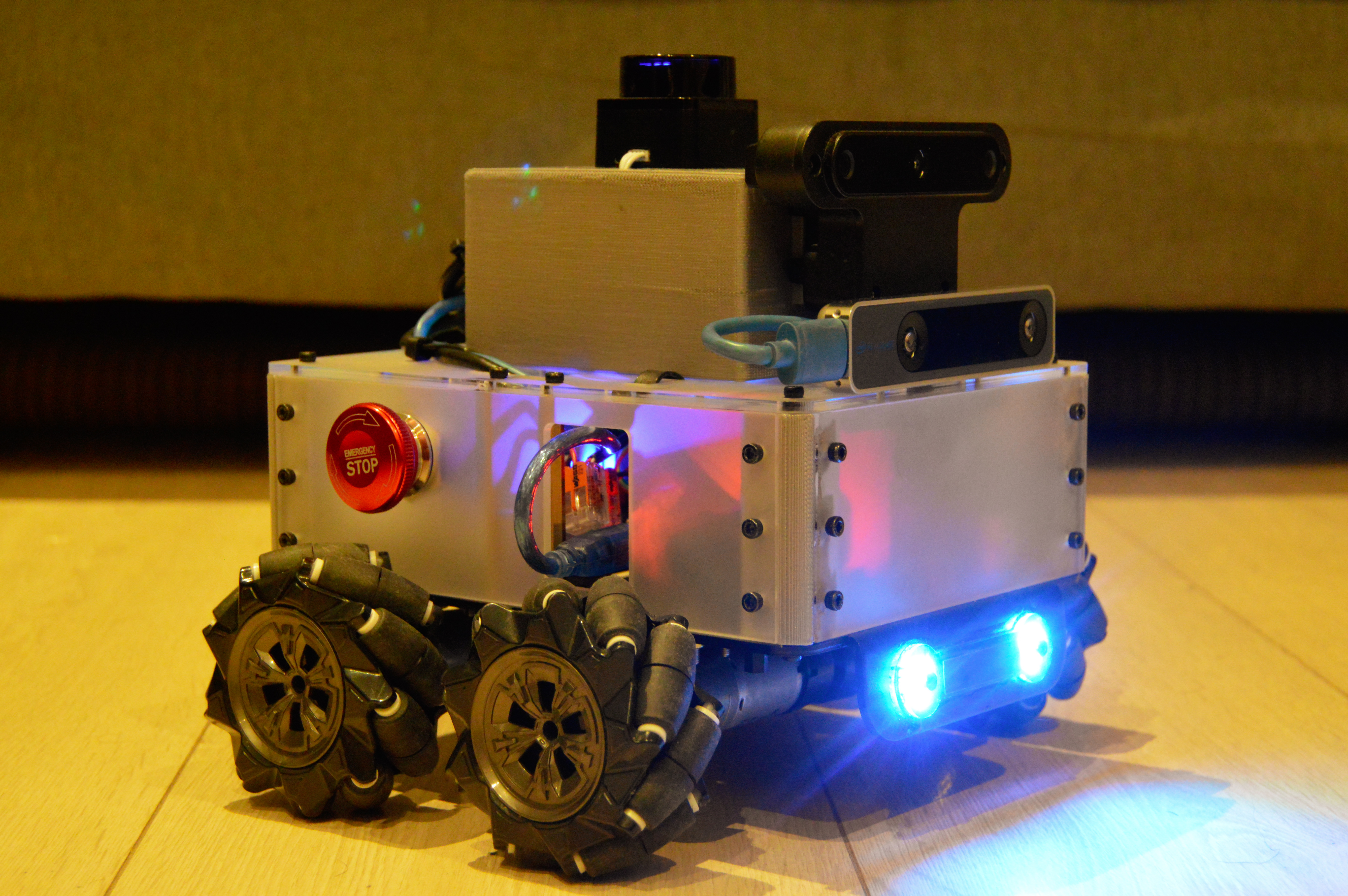

Glow-up: Finally, after the navigation stack was ready, the motor control was done and everything was in working order, it was time to add an enclosure to cover up the messy wiring. Since my December holidays got cancelled, I spent the time designing corner pieces and side panels to enclose the base module. The corner pieces were 3D printed, and brass threaded inserts were then added for extra support. Then, the side panels were laser cut in acrylic and screwed into these corner pieces. I soon realized that this construction was not very rigid, so I superglued the side panels in. Now, the enclosure is one part that sits between the chassis on the bottom and the navigation module plate on the top. I also added access holes for the Arduino and the power supply, and an emergency stop button to one of the side panels. I also impulse-purchased a wireless charging module which turned out to be really efficient, so I decided to add it to the robot. I prototyped it using some spare panels, but it was almost the end of the year and I couldn’t design parts in time to 3D print or laser cut them. It’s definitely on my to-do list for 2022. Finally, the finished construction, without the wireless charging add-on, looks like this:

Plans for 2022: The AKROS project is not completed yet, as I still have a few things to change. For starters, a few weeks ago I tested a 12V/3A wireless charging module that I would like to add to the robot, and I am currently in the process of designing parts for it and getting ready to 3D print and laser-cut them. I also decided to remove the OAK-D from this platform, as I realized that the Raspberry Pi cannot handle both the OAK-D and the ROS Navigation Stack together, even after I upgraded to the 8GB version of the RPi. I had planned on using the OAK-D to implement visual-inertial odometry and replace the T265, but I couldn’t do that in 2021. So, the T265 remains on the AKROS platform, and the OAK-D (and VIO) will be implemented on another robot. This means I also need to update the AKROS software package to remove its dependencies on the OAK-D and its API, and also design new 3D printed parts for the navigation module. Finally, I plan on cleaning up the code, adding some documentation and making the repository public on GitHub. Once this is done, I can continue with my plans for 2022:

- Some time off: 2021 was a hectic year for all of us. It was definitely quite chaotic for me, as I handled my full-time job, some freelance projects as well as this robot project. This meant that I was working most weekends and some evenings on weekdays, and even on some days off from my full-time work. My December vacation plans also fell apart, so I desperately need some time off. Once I’m done with the parts re-design and the code cleanup, I will take a month off from side-projects, and also a couple of weeks off from my full-time job. I decided to put this as a specific goal for 2022, because taking time off is essential and I need to catch up on that.

- ROS2: I spent some time in 2021 learning about ROS2 and trying out some tutorials. This year, I want to port the AKROS robot from ROS1 Noetic to ROS2 Galactic. Last year, I already implemented and tested the ROS 1-2 Bridge, which gives me a small start towards porting components to ROS2.

- 3D SLAM and VIO: This year I finally want to implement VIO on the OAK-D. I have also pre-ordered the OAK-D Lite, which is expected in April this year. It will be nice to test 3D SLAM and Visual Odometry with and without the IMU (which is the only difference between the two cameras). Once again I will be using ROS1, but if I have made sufficient progress on this, it will also be ported to ROS2.

- First commercial product: I’ve been building robots for a while now - for university, for industry clients and also for myself. I now think I am ready to design and build a product for the commercial market. I want to redesign the base module of the AKROS robot - with low-noise and high-torque motors, robust wheels (and suspension) and improved low-level control (possibly using ROS2/microROS). I also want to experiment with different drive configurations like 3WD with omniwheels and differential swerve mechanisms. I will get help from friends/peers and freelancers for this, but I hope to have a simple product (with documentation) and a website ready by the end of this year.

- First ROSCon: I’ve been wanting to visit Japan for quite a while now. First, it was because of Pokemon, then because of robots and humanoids, and for the past few years, it’s been about sushi (ever since I tried it for the first time in 2017). I also found out on Twitter that the international ROS conference ROSCon will be held in Kyoto in 2022. I really hope the pandemic doesn’t mess this up because I really couldn’t find a better time to visit Japan. I also really need a proper holiday after the last two years, so I’m keeping my fingers crossed.

If you have read through this brain-dump of mine and reached this point, thank you! I have been writing this blog as a journal for myself, but I found out that people have been finding these articles and that it’s helping them build and program their own robots. I have also received some really nice comments, feedback and questions from readers, and I really appreciate that. As a thank you, here’s a video of the robot’s first trip outside my apartment and onto the carpet in my building’s hallway.

As you can see, the motors are still quite noisy, and it looks like the PID controller also needs to be retuned for this scenario, but these are things I plan on improving in 2022. I’ve already started by taking the OAK-D off. Lots of other big things are happening this year, and only time will tell how much of it I actually accomplish.